The Essence of Backpropagation

Prof. Dr. Martin Bücker, Dr. Torsten Bosse

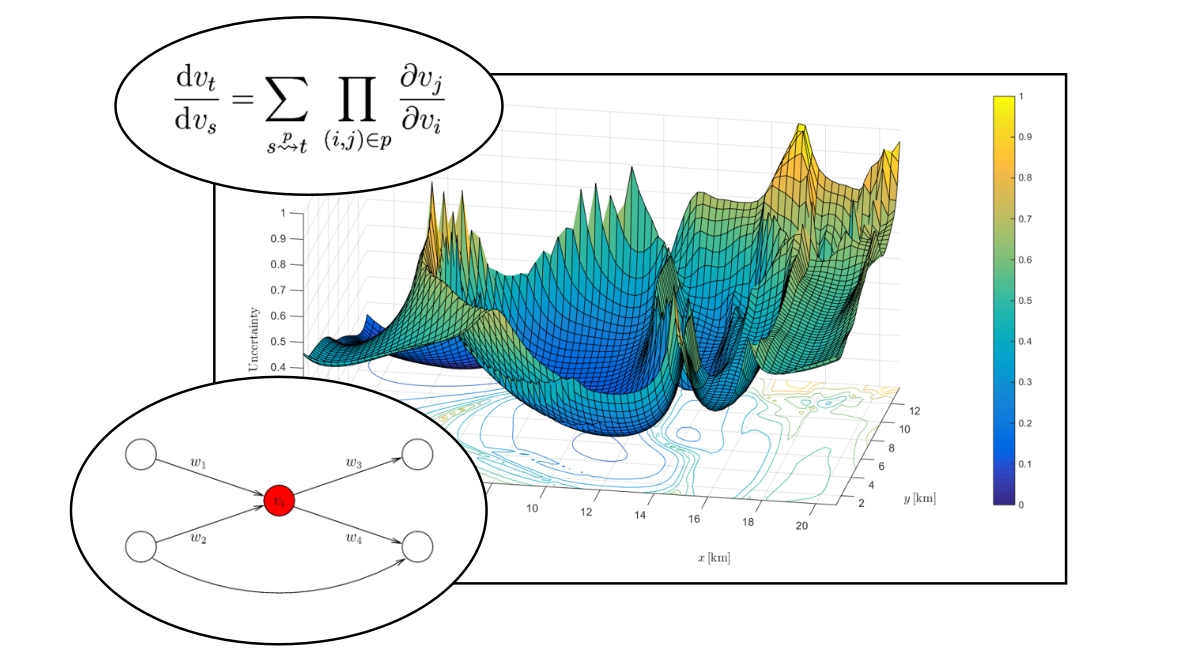

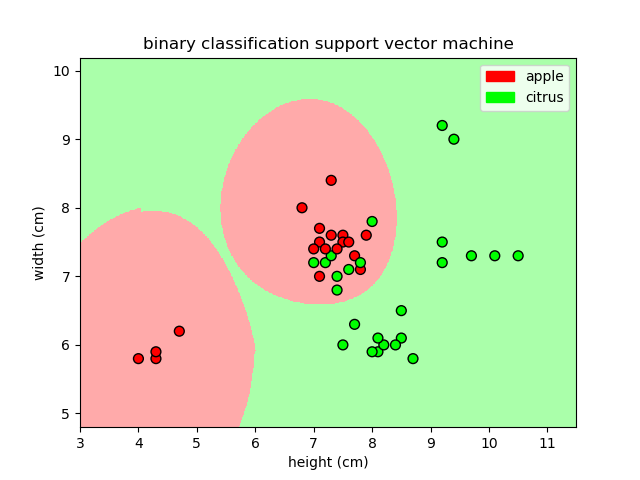

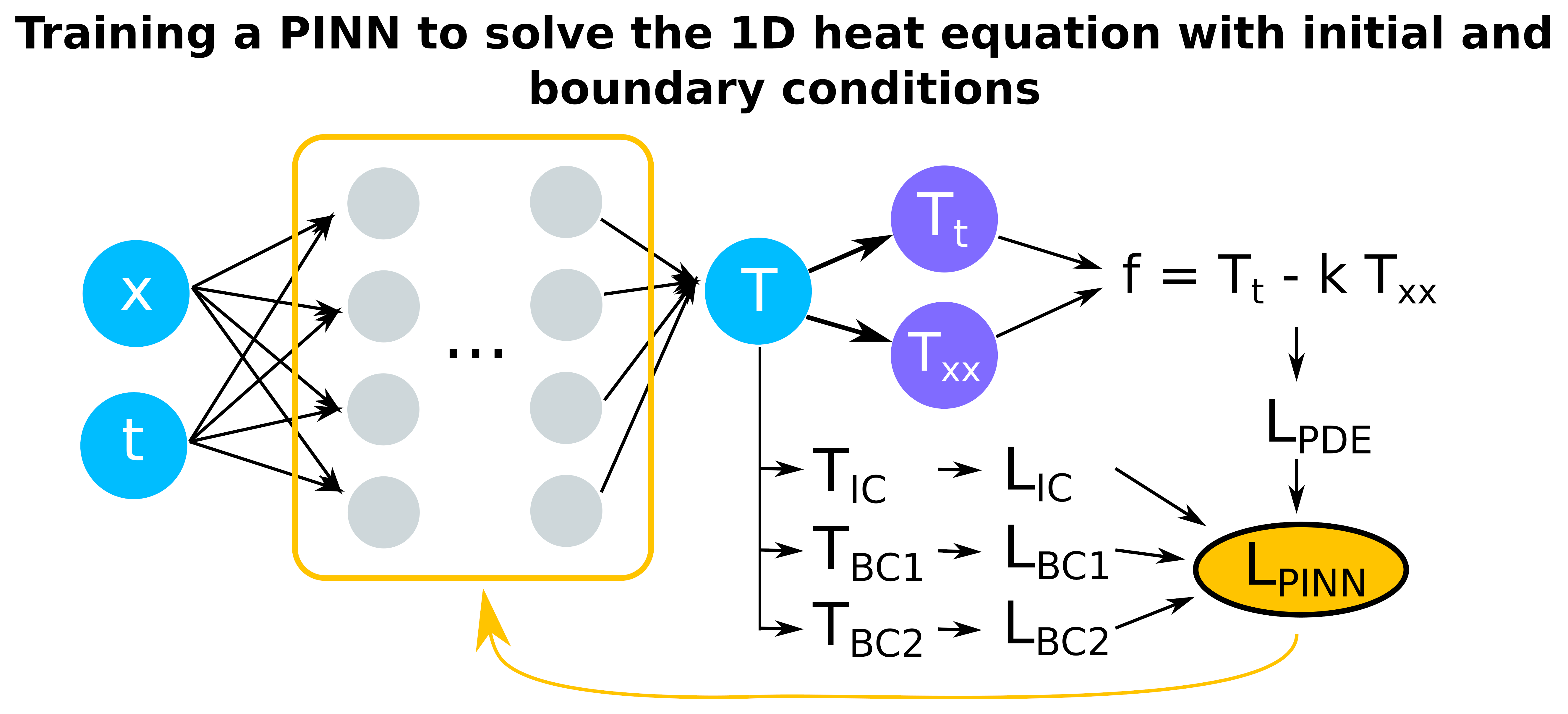

Many algorithms in artificial intelligence arise from suitable formulations of mathematical optimization problems, so it comes as no surprise that these algorithms rely heavily on gradients. Very often, the evaluation of gradients turns out to be the cornerstone of the whole solution process. These gradients can be evaluated efficiently by a set of powerful techniques known as automatic differentiation. The backpropagation algorithm, which is frequently used to train deep neural networks, is a particular instance of automatic differentiation, namely its reverse mode.
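To make the link between backpropagation and automatic differentiation concrete, the following is a minimal sketch of reverse-mode automatic differentiation in plain Python. The class `Var` and the functions `tanh` and `backward` are hypothetical helpers introduced only for this illustration, and the single tanh neuron with a squared-error loss is an assumed toy model, not an example taken from the authors. The sketch records each elementary operation together with its local partial derivatives, then sweeps backwards through the recorded operations to accumulate the gradient. The result is compared with the gradient obtained by applying the chain rule by hand, which is what backpropagation computes for this model.

```python
import math


class Var:
    """Scalar that remembers how it was computed (hypothetical helper)."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # pairs (input Var, local partial derivative)
        self.grad = 0.0         # d(output)/d(self), filled in by backward()

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __sub__(self, other):
        return Var(self.value - other.value, ((self, 1.0), (other, -1.0)))

    def __mul__(self, other):
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))


def tanh(x):
    t = math.tanh(x.value)
    return Var(t, ((x, 1.0 - t * t),))  # d tanh(x)/dx = 1 - tanh(x)^2


def backward(output):
    """Reverse sweep: propagate derivatives from the output to all inputs."""
    order, seen = [], set()

    def visit(node):
        # Depth-first post-order: every input is listed before its consumer.
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    output.grad = 1.0
    for node in reversed(order):  # consumers are processed before their inputs
        for parent, local in node.parents:
            parent.grad += local * node.grad  # chain rule


# Assumed toy model: one neuron, loss = (tanh(w * x + b) - y)^2
w, b = Var(0.5), Var(-0.1)
x, y = Var(2.0), Var(1.0)
err = tanh(w * x + b) - y
loss = err * err
backward(loss)

# The same gradient derived by hand with the chain rule
a = math.tanh(0.5 * 2.0 - 0.1)
dw_manual = 2.0 * (a - 1.0) * (1.0 - a * a) * 2.0
db_manual = 2.0 * (a - 1.0) * (1.0 - a * a)

print(w.grad, dw_manual)
print(b.grad, db_manual)
```

In this sketch a single backward sweep yields the derivatives of the loss with respect to both `w` and `b` at once. This is the property that makes the reverse mode attractive when a scalar loss depends on many parameters.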