The summer school's program offers a diverse range of courses. The following abstracts outline the subject and main topics of each.

Automatic Differentiation in the Sciences

Prof. Dr.-Ing. Martin Bücker

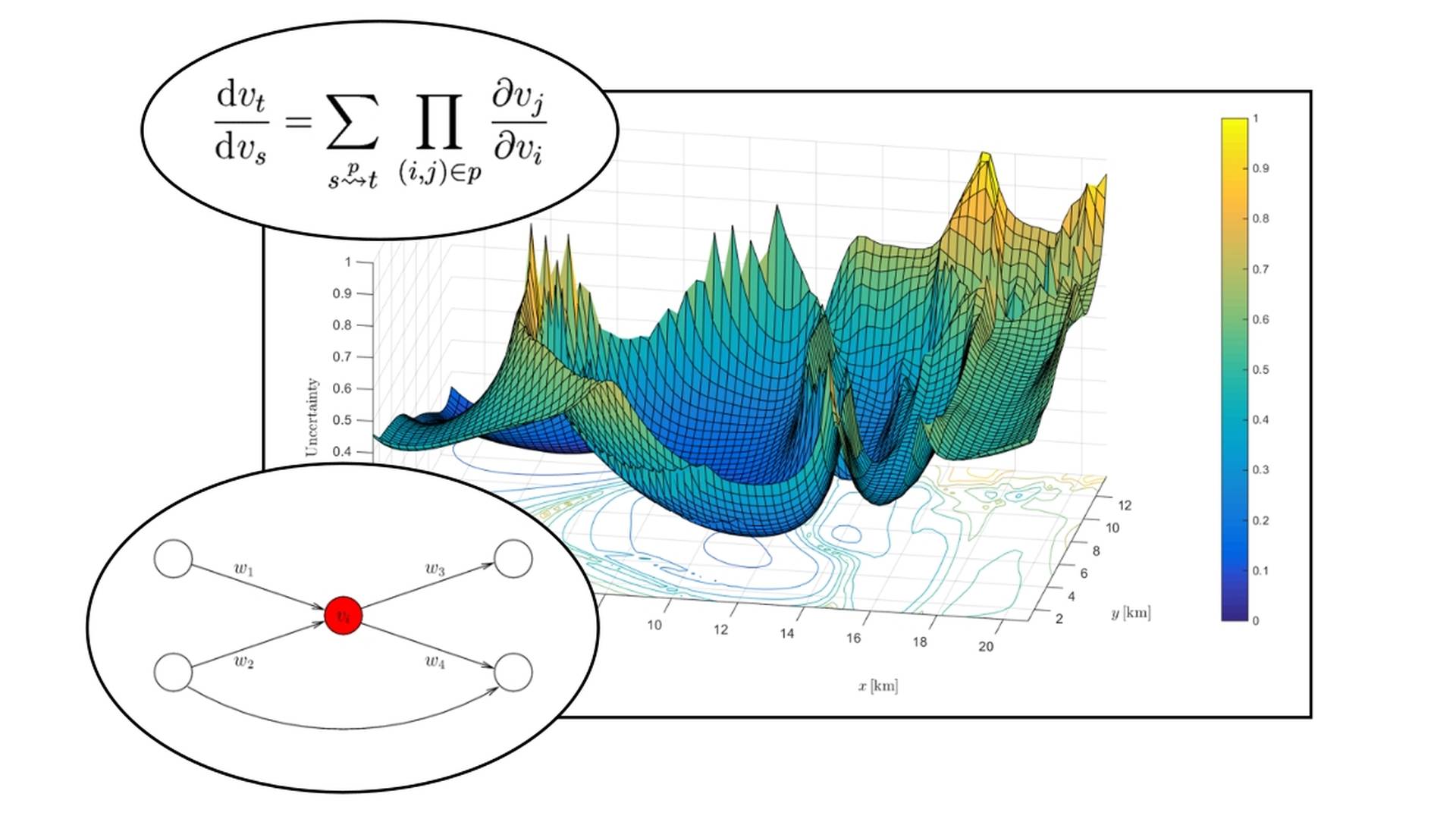

Many algorithms in artificial intelligence are based on suitably formulating continuous optimization problems. Since mathematical optimization heavily relies on first- and higher-order derivatives, it is important to study the design, analysis, and implementation of efficient and accurate techniques to compute gradient, Jacobian, and Hessian information. This tutorial gives an introduction to the rich set of powerful techniques known as automatic differentiation. These techniques are capable of evaluating derivatives for functions given in the form of computer programs. The tutorial presents the essence of two major automatic differentiation techniques. The forward mode propagates sensitivity information along the control flow of the original program. In the reverse mode, which generalizes the backpropagation algorithm frequently used to train deep neural networks, the control flow of the original program is reversed. The pros and cons of forward and reverse mode are outlined and students will compute derivatives in practical hands-on exercises.
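To make the contrast between the two modes concrete, here is a minimal sketch in plain Python. It is not the tutorial's exercise material: the classes `Dual` and `Var`, the helper `sin`, and the test function `f(x, y) = x*y + sin(x)` are all hypothetical names chosen for illustration. The forward mode carries a derivative alongside each value, so it needs one sweep per input. The reverse mode records the computation and then sweeps through it backwards once to obtain the derivatives with respect to all inputs.

```python
import math

class Dual:
    """Forward mode: a value paired with its derivative along one input direction."""
    def __init__(self, val, dot=0.0):
        self.val, self.dot = val, dot
    def __add__(self, other):
        return Dual(self.val + other.val, self.dot + other.dot)
    def __mul__(self, other):
        # Product rule, evaluated alongside the original multiplication.
        return Dual(self.val * other.val,
                    self.dot * other.val + self.val * other.dot)

class Var:
    """Reverse mode: each result remembers its inputs and local partial derivatives."""
    def __init__(self, val, parents=()):
        self.val, self.parents, self.grad = val, parents, 0.0
    def __add__(self, other):
        return Var(self.val + other.val, ((self, 1.0), (other, 1.0)))
    def __mul__(self, other):
        return Var(self.val * other.val, ((self, other.val), (other, self.val)))

def sin(x):
    """Sine overloaded for both number types (hypothetical helper)."""
    if isinstance(x, Dual):
        return Dual(math.sin(x.val), math.cos(x.val) * x.dot)
    return Var(math.sin(x.val), ((x, math.cos(x.val)),))

def f(x, y):
    # Example function; works for Dual and Var arguments alike.
    return x * y + sin(x)

def forward_gradient(x, y):
    # One forward sweep per input: seed one direction with 1, the other with 0.
    dx = f(Dual(x, 1.0), Dual(y, 0.0)).dot
    dy = f(Dual(x, 0.0), Dual(y, 1.0)).dot
    return dx, dy

def reverse_gradient(x, y):
    vx, vy = Var(x), Var(y)
    out = f(vx, vy)
    # Order the recorded operations so that inputs come before their results.
    order, seen = [], set()
    def visit(v):
        if id(v) not in seen:
            seen.add(id(v))
            for parent, _ in v.parents:
                visit(parent)
            order.append(v)
    visit(out)
    # A single backward sweep accumulates the derivatives of all inputs.
    out.grad = 1.0
    for v in reversed(order):
        for parent, partial in v.parents:
            parent.grad += partial * v.grad
    return vx.grad, vy.grad
```

Both `forward_gradient(2.0, 3.0)` and `reverse_gradient(2.0, 3.0)` return the pair `(3 + cos(2), 2)`. The forward version evaluates `f` twice, the reverse version only once, which is why the reverse mode is attractive for functions with many inputs and few outputs.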

Classification

Prof. Dr. Gerhard Zumbusch

Binary Classification Algorithms

We introduce a number of machine learning algorithms. Standard tasks in supervised learning are classification, in particular binary classification, and regression. We cover definitions, applications, and the relations between these tasks, as well as standard algorithms, including nearest neighbors, support vector machines, Gaussian naive Bayes, decision trees, and logistic regression. Challenges and examples in Python are given.
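As an illustration of the simplest algorithm on this list, the following sketch implements a k-nearest-neighbors classifier for binary labels using only the Python standard library. The function name `knn_predict`, the toy data, and the choice `k=3` are assumptions made for this example and are not taken from the course material.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest training points."""
    # Pair each Euclidean distance with the label of the corresponding training point.
    neighbors = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in neighbors[:k])
    return votes.most_common(1)[0][0]

# Toy data: two well-separated clusters with labels 0 and 1.
X = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3), (3.0, 3.0), (3.2, 2.9), (2.8, 3.1)]
y = [0, 0, 0, 1, 1, 1]
```

With this data, `knn_predict(X, y, (0.1, 0.2))` returns `0` and `knn_predict(X, y, (2.9, 3.1))` returns `1`. An odd `k` avoids tied votes in the binary case.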

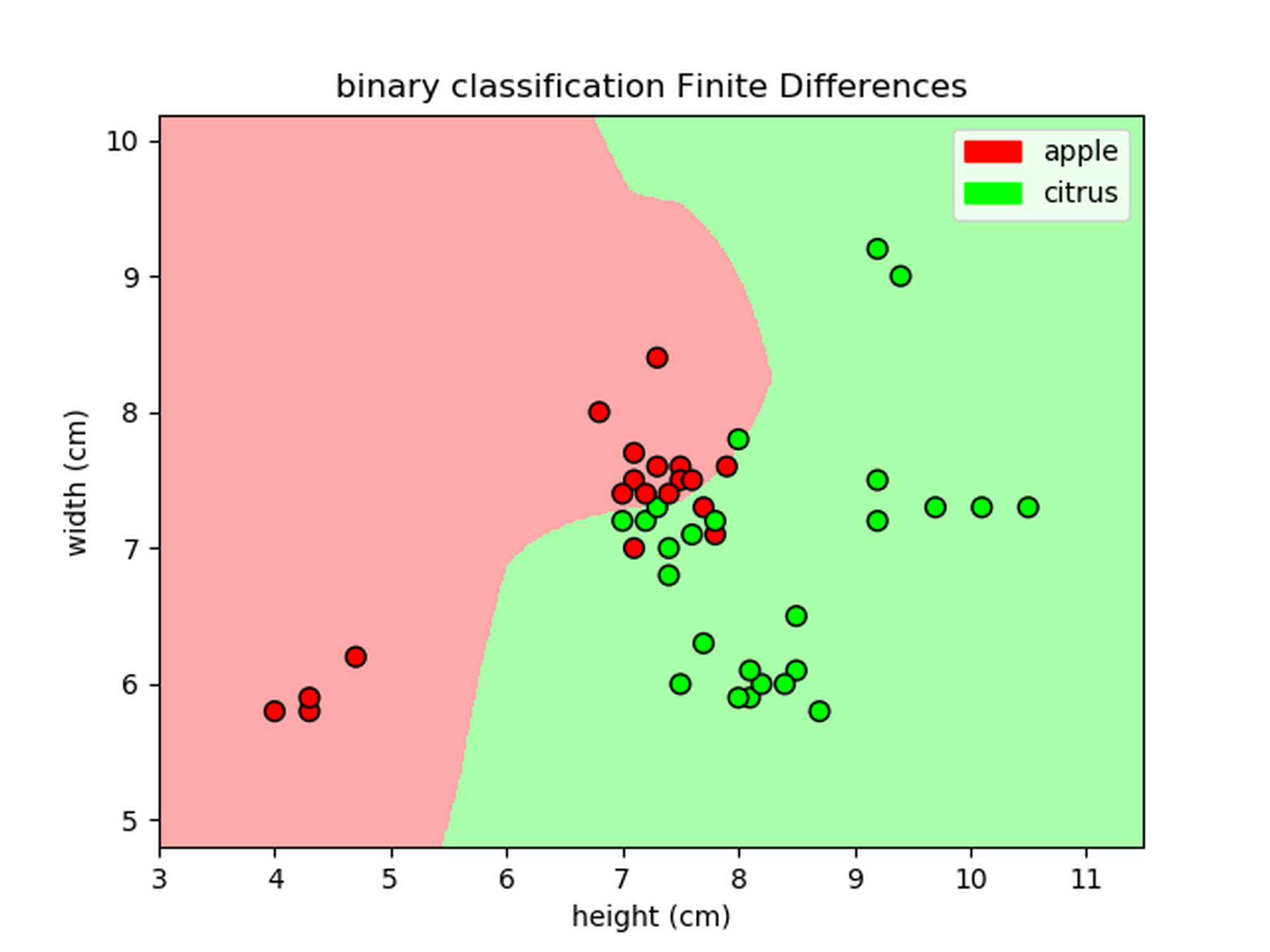

Scattered Data Approximation for Classification

We introduce the scattered data approximation problem. Standard algorithms along with a grid-based method are covered. A regularized finite difference method for PDEs is constructed to solve the problem. A Python implementation for the approximation problem and an application to the classification problem are developed.
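The idea can be sketched in one dimension. The example below is an illustration under stated assumptions, not the method developed in the course: it assumes a uniform grid on [0, 1], piecewise linear interpolation of the nodal values, and a penalty on finite differences of neighboring nodal values with weight `lam`. The names `fit_grid_1d`, `evaluate`, and `classify` are hypothetical. Minimizing the data misfit plus the penalty leads to a symmetric tridiagonal linear system, solved here by the Thomas algorithm.

```python
def fit_grid_1d(xs, ys, n=9, lam=1e-2):
    """Fit nodal values u[0..n-1] on a uniform grid over [0, 1] to scattered data."""
    h = 1.0 / (n - 1)
    diag = [0.0] * n        # main diagonal of the system matrix
    off = [0.0] * (n - 1)   # off-diagonal (the matrix is symmetric)
    rhs = [0.0] * n
    # Data term: each point contributes through the two hat functions around it.
    for x, y in zip(xs, ys):
        j = min(int(x / h), n - 2)
        t = x / h - j
        w0, w1 = 1.0 - t, t
        diag[j] += w0 * w0
        diag[j + 1] += w1 * w1
        off[j] += w0 * w1
        rhs[j] += w0 * y
        rhs[j + 1] += w1 * y
    # Regularization term: lam * h * ((u[j+1] - u[j]) / h)**2 on every cell.
    c = lam / h
    for j in range(n - 1):
        diag[j] += c
        diag[j + 1] += c
        off[j] -= c
    # Thomas algorithm: forward elimination, then back substitution.
    d, r = diag[:], rhs[:]
    for j in range(1, n):
        m = off[j - 1] / d[j - 1]
        d[j] -= m * off[j - 1]
        r[j] -= m * r[j - 1]
    u = [0.0] * n
    u[-1] = r[-1] / d[-1]
    for j in range(n - 2, -1, -1):
        u[j] = (r[j] - off[j] * u[j + 1]) / d[j]
    return u

def evaluate(u, x):
    """Piecewise linear interpolation of the nodal values at x in [0, 1]."""
    n = len(u)
    h = 1.0 / (n - 1)
    j = min(int(x / h), n - 2)
    t = x / h - j
    return (1.0 - t) * u[j] + t * u[j + 1]

def classify(u, x):
    """Binary classification by the sign of the fitted function."""
    return 1 if evaluate(u, x) >= 0.0 else -1

# Toy training data with labels -1 (left half) and +1 (right half).
xs = [0.1, 0.2, 0.3, 0.7, 0.8, 0.9]
ys = [-1.0, -1.0, -1.0, 1.0, 1.0, 1.0]
u = fit_grid_1d(xs, ys)
```

Encoding the two classes as -1 and +1 turns classification into an approximation problem: the fitted function is evaluated at a new point and its sign gives the predicted class, so `classify(u, 0.15)` yields `-1` and `classify(u, 0.85)` yields `1`.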

Hierarchical Grid-Based Scattered Data Approximation

We discuss some improvements of the grid-based scattered data approximation for regression and classification. We introduce multigrid methods for the efficient solution of the linear systems of equations, and sparse grid discretizations, which are well suited to high-dimensional feature spaces. Some Python applications are shown.
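The benefit of sparse grids in high dimensions can be seen by simply counting grid points. The sketch below assumes a regular sparse grid without boundary points, built from hierarchical increments with level multi-indices `l` satisfying `l_i >= 1` and `l_1 + ... + l_d <= level + dim - 1`, where increment `l` contributes `2**(sum(l) - dim)` points. This convention and the function names are assumptions for illustration; the course may use a different construction.

```python
from math import comb

def full_grid_points(level, dim):
    """Interior points of a full grid with mesh width 2**-level in each dimension."""
    return (2 ** level - 1) ** dim

def sparse_grid_points(level, dim):
    """Interior points of a regular sparse grid of the given level.

    There are comb(s - 1, dim - 1) multi-indices with entries >= 1 and sum s,
    and each of them contributes 2**(s - dim) points.
    """
    return sum(
        comb(s - 1, dim - 1) * 2 ** (s - dim)
        for s in range(dim, level + dim)
    )

for dim in (1, 2, 5, 10):
    print(dim, full_grid_points(5, dim), sparse_grid_points(5, dim))
```

In one dimension both counts agree. In two dimensions at level 3, the full grid has 49 interior points and the sparse grid has 17, and the gap widens rapidly as the dimension grows.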

Tiled Tensor Computations

Prof. Dr. Alexander Breuer

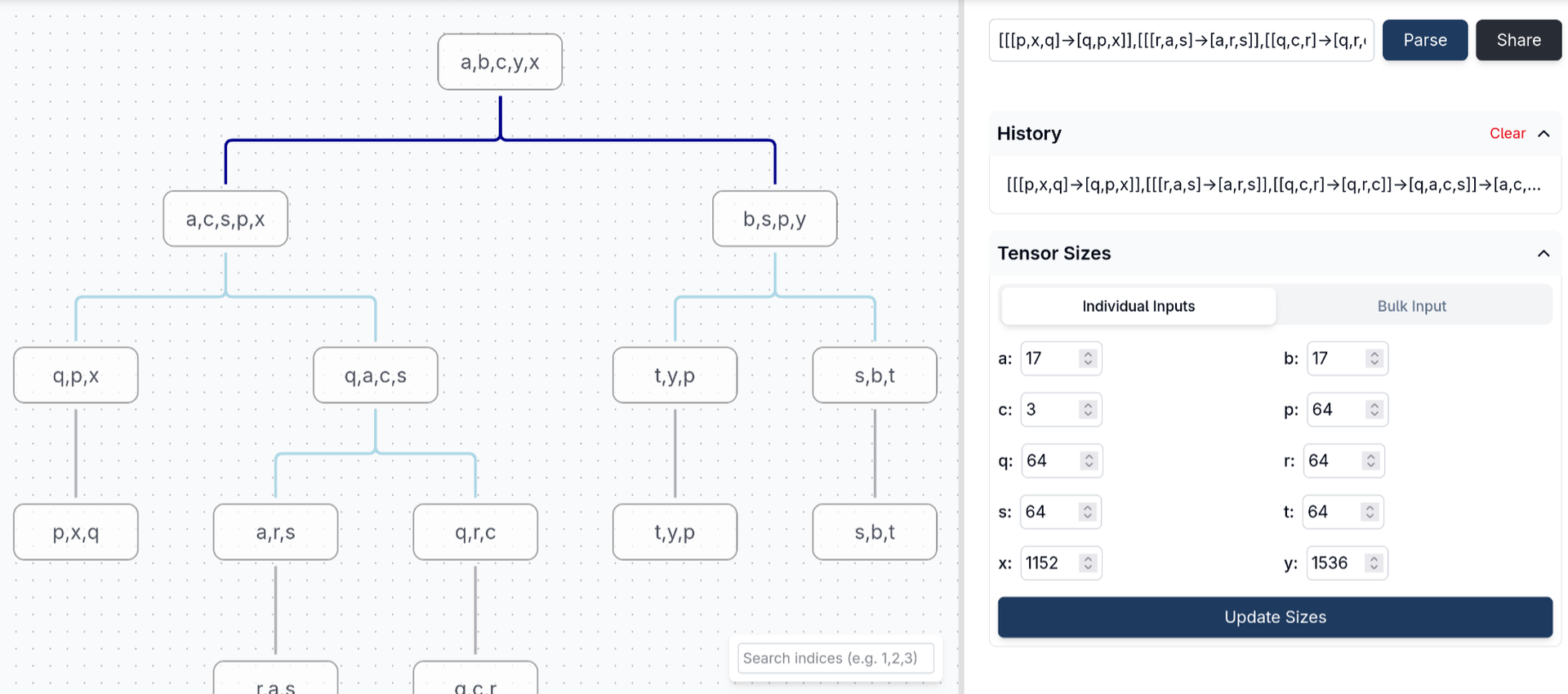

An increasing share of machine-learning software infrastructure for tensor computation is adopting tile-based approaches. Tile-based programming describes tensor operations as primitives applied to low-dimensional subtensors, called tiles. Tiles are often two-dimensional, so many primitives reduce to matrix operations.
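The following sketch shows the tile-based idea for a plain matrix product, using nested Python lists so that it runs without any dependencies. It is not based on etops or any of the tile languages: the names `matmul_tile`, `get_tile`, and `tiled_matmul`, and the tile size of 2, are hypothetical. The outer loops only move tiles around, and all arithmetic happens in one small matrix primitive.

```python
def matmul_tile(a, b, acc):
    """Primitive on 2D tiles: acc += a @ b, with tiles stored as lists of rows."""
    for i in range(len(a)):
        for j in range(len(b[0])):
            acc[i][j] += sum(a[i][k] * b[k][j] for k in range(len(b)))

def get_tile(m, row0, col0, size):
    """Copy the tile of `m` starting at (row0, col0); edge tiles may be smaller."""
    return [row[col0:col0 + size] for row in m[row0:row0 + size]]

def tiled_matmul(A, B, tile=2):
    """Compute C = A @ B by applying the tile primitive to pairs of subtensors."""
    n, k, m = len(A), len(B), len(B[0])
    C = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            # One output tile is accumulated over all tiles along the k dimension.
            acc = [[0.0] * min(tile, m - j0) for _ in range(min(tile, n - i0))]
            for k0 in range(0, k, tile):
                matmul_tile(get_tile(A, i0, k0, tile),
                            get_tile(B, k0, j0, tile),
                            acc)
            # Write the finished tile back into the result.
            for di, row in enumerate(acc):
                C[i0 + di][j0:j0 + len(row)] = row
    return C

A = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
B = [[1, 0], [0, 1], [2, 3]]
C = tiled_matmul(A, B)
```

On real hardware the primitive would map to an optimized matrix kernel and the tile size would be chosen to fit registers or fast on-chip memory. Here the structure is the point, not the speed.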

This tutorial uses the etops package to execute tiled tensor contractions. etops uses the low-level Tiled Execution Intermediate Representation (TEIR). Conceptually, this IR is akin to emerging tile-oriented kernel languages such as Triton, cuTile and Tile IR, Pallas, and TileIR.

In the first part, we use PyTorch to execute a series of tensor contractions that reconstruct image data compressed with a tensor-ring decomposition. The second part demonstrates low-level acceleration of these contractions using the tile-based approach provided by etops.